Introduction to Threat Modeling: What Can Go Wrong?

Threat modeling is an essential practice for understanding potential security risks. We'll discuss how imagining worst-case scenarios, like a casino breach via a smart fish tank thermometer, can help teams anticipate and mitigate threats.

This presentation was delivered at the ivision Security Symposium 2025. This post is based on a transcript of that presentation and has been edited for clarity.

My name is Roman Faynberg, and I am a consultant in the ivision security assessment practice. I have been breaking things, and breaking into things, since around 2007.

As I begin my presentation today, I have a quick question…

What can go wrong here? There are many things I could point out, but they’re pretty obvious.

Another question: what can go wrong here?

In 2017, attackers used a fish tank to breach a casino. They didn't target the vault or the slot machines. They targeted a smart thermometer in that fish tank, compromised it, and exfiltrated gigabytes of data. The device had been configured with a VPN that let it communicate with the outside world, and the attackers used that path to siphon the data out to Finland. The question I'd like to pose is: how could the casino have seen it coming? What could they have thought about? Threat modeling is really about imagining how bad things could happen before they happen.

How would you design a bank knowing there will be a heist? Would you place an entrance door right next to the vault? Would you give a spare copy of the keys to everyone on the team, including the cleaning staff? Probably not. These are the kinds of questions you need to ask when you know bad things can happen.

Threat modeling is the art of finding as many answers as possible to two questions: "What can go wrong?" and "What can we do about it?" Remember that threat modeling is different from searching for vulnerabilities. A vulnerability is just one way to realize a threat, but the threat exists regardless.

Why is threat modeling important, and why is it more important now than before? Systems have been getting increasingly complex; that is the nature of technological progress. As complexity rises, the attack surface grows. There are many more ways for adversaries to reach our systems because everything is connected. Smart devices are everywhere. IIoT gadgets, maybe even a slightly angry smart iron, all talk to the internet. These devices might be on your network already.

We also have large language models and other forms of artificial intelligence that we use more and more. Customer service agents are often implemented with LLMs these days. What could go wrong with those?

Not only is the attack surface growing, but the barrier to entry for attackers keeps dropping. AI can write very convincing phishing emails, and we have seen exactly that in recent security assessments. In five to fifteen minutes I can spin up a completely fake company, with fabricated executive biographies and team stories, ready to use in a phishing exercise aimed at infiltrating an organization. It is very easy to do.

The barrier to entry for attackers is getting lower, which makes it all the more important to design securely from the start; doing so is also easier and cheaper than fixing things later. Threat modeling helps you understand a complex system better, formalizes that understanding, and encourages you to think like an attacker. Thinking like an attacker leads to a more secure design.

Is threat modeling free of challenges? No. Sometimes it is perceived as low-utility. Some people call it a tedious exercise: why spend hours discussing what might happen? Did you find or fix a vulnerability? No. So what did you do? That question misses the point. The goal is to find what can go wrong so you can plan to address it later.

Another issue is keeping the threat model up to date. It is only as useful as it is current. Technology, features, systems, infrastructure, and applications all change, and the associated threats change with them. The model must be a living document; it loses value when abandoned.

Often threat modeling is shunned because of its perceived low value, and that is unfortunate. In this presentation I hope to show exactly how it can be useful and how it can be applied in real-world scenarios to make whatever you are building more secure.

There are many different ways to approach threat modeling. There are a lot of frameworks, and they are organized to help you think about a few key questions: what are you trying to protect, who are you trying to protect it against, and what attack vectors could be used to reach those assets.

You do not need to memorize any of these frameworks, but one long-standing example is Microsoft's STRIDE model. It has been around since the 1990s and remains relevant because, instead of focusing on specific vulnerabilities, it emphasizes core concepts. STRIDE is a cornerstone of threat modeling and offers a structured way to think about threats.
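To make the "core concepts" idea concrete, here is a minimal Python sketch that pairs each STRIDE category with the security property it is commonly described as violating. The enum and helper names are illustrative only and are not part of any Microsoft tooling.

```python
from enum import Enum

class Stride(Enum):
    """Each STRIDE category, paired with the security property it violates."""
    SPOOFING = "authentication"
    TAMPERING = "integrity"
    REPUDIATION = "non-repudiation"
    INFORMATION_DISCLOSURE = "confidentiality"
    DENIAL_OF_SERVICE = "availability"
    ELEVATION_OF_PRIVILEGE = "authorization"

def acronym() -> str:
    # Enum iteration follows definition order, so the first letters spell the acronym.
    return "".join(category.name[0] for category in Stride)

for category in Stride:
    print(f"{category.name:<24} violates {category.value}")
```

Framing each category as a broken property is what keeps the model useful over time: the question becomes "how could authentication fail here?" rather than "is this one specific bug present?"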

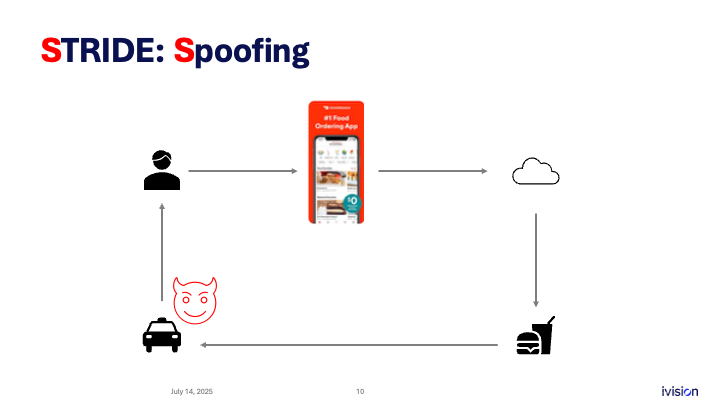

For this example I wanted a food delivery app, so I grabbed a screenshot of DoorDash; it is a very prominent app, which made it a logical choice. Disclaimer: we're not looking for or discussing specific vulnerabilities. This is about potential threats in a typical food delivery application like DoorDash.

You place an order through the mobile app. The mobile app talks to the cloud. Something magical happens in the cloud: the system contacts the restaurant, the restaurant prepares the food, the food is handed to the driver, and the driver hands it to you.
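One common way to start is to write the system down as a data flow diagram and apply STRIDE to each element, a technique often called STRIDE-per-element. The sketch below assumes a simplified, hypothetical model of the flow just described (the element names are invented for illustration) and uses the usual per-element-type mapping: external entities get S and R, processes get all six categories, data stores get T, R, I, and D, and data flows get T, I, and D.

```python
from dataclasses import dataclass

# STRIDE-per-element: categories that typically apply to each element type.
APPLICABLE = {
    "external_entity": "SR",
    "process": "STRIDE",
    "data_store": "TRID",  # R mainly matters when the store holds audit logs
    "data_flow": "TID",
}

@dataclass(frozen=True)
class Element:
    name: str
    kind: str  # must be one of the APPLICABLE keys

# Hypothetical, simplified model of the delivery flow.
ELEMENTS = [
    Element("Customer", "external_entity"),
    Element("Restaurant", "external_entity"),
    Element("Driver", "external_entity"),
    Element("Mobile app", "process"),
    Element("Cloud order service", "process"),
    Element("Order database", "data_store"),
    Element("App -> cloud: order request", "data_flow"),
    Element("Cloud -> restaurant: order ticket", "data_flow"),
]

def candidate_threats(elements):
    """Yield (element name, STRIDE letter) pairs to discuss in a session."""
    for element in elements:
        for letter in APPLICABLE[element.kind]:
            yield element.name, letter

for name, letter in candidate_threats(ELEMENTS):
    print(f"{letter}  {name}")
```

The output is not a list of findings; it is a checklist of prompts, such as "T on the order request flow", that the group then answers one by one.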

What threats can we identify? This is where STRIDE comes in. STRIDE is an acronym: S is for spoofing. Spoofing means pretending to be someone you’re not.

For example, what if someone poses as a delivery driver and uses that cover to get close to your home? That's a threat.

From an infrastructure perspective, what if spoofing happens through a flaw in the identity provider you are using? That is another threat. Perhaps the flaw lets an attacker create identities signed with keys that were never authorized, yet your system still trusts them. That is also a spoofing flaw.

Thinking about what can happen here is part of the threat model.

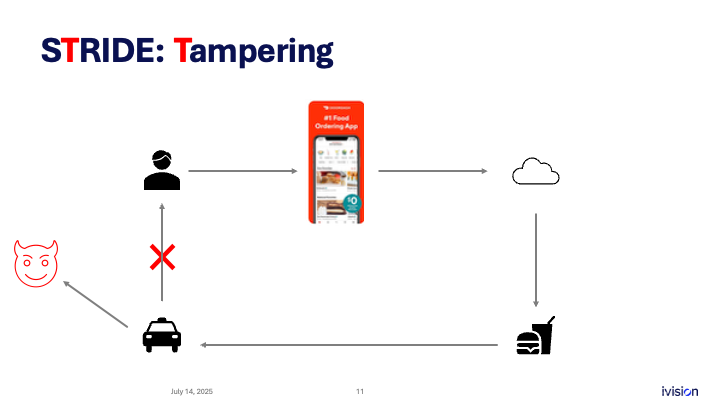

T stands for tampering. In this simple example, an attacker manages to redirect an order: one person places and pays for it, but the delivery goes to someone else.
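A common defense against this kind of tampering is to treat the server's copy of the order as authoritative and check every change against the authenticated caller. This is a minimal sketch with hypothetical names (`Order`, `OrderService`, `change_address`); the rule that an order cannot be redirected once it leaves the "placed" state is an illustrative assumption, not a description of how any real delivery app works.

```python
from dataclasses import dataclass

@dataclass
class Order:
    order_id: str
    customer_id: str
    delivery_address: str
    status: str = "placed"

class OrderService:
    """Hypothetical service that holds the authoritative order records."""

    def __init__(self):
        self._orders: dict[str, Order] = {}

    def place(self, order: Order) -> None:
        self._orders[order.order_id] = order

    def change_address(self, session_user: str, order_id: str, new_address: str) -> Order:
        order = self._orders[order_id]
        # The caller's identity comes from the authenticated session, not the request body.
        if session_user != order.customer_id:
            raise PermissionError("only the customer who placed the order may change it")
        # Once the food has left the restaurant, the destination is frozen.
        if order.status != "placed":
            raise PermissionError("order can no longer be redirected")
        order.delivery_address = new_address
        return order
```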

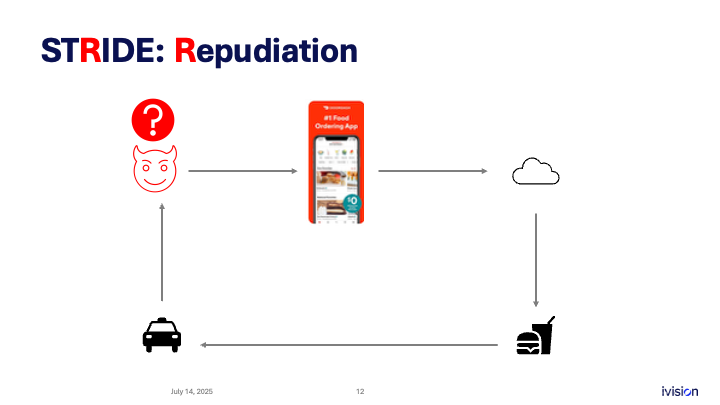

R is for repudiation. Repudiation means denying responsibility for an action you took. In the context of this system, suppose I place an order, receive the food, and then claim I never received it so that I don't have to pay. If I can convince the system to believe me, the business loses revenue. This is a repudiation threat: the ability to avoid responsibility for an action or a ledger entry.
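Repudiation threats are usually countered with trustworthy records of who did what. A minimal sketch, assuming a hypothetical `AuditLog` for delivery events, is a hash-chained log in which every entry commits to the previous one, so editing any past entry breaks verification. A chain only makes tampering detectable: someone who can rewrite the entire log can rebuild the chain, so in practice the latest hash would also be stored somewhere the application cannot modify.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(record: dict) -> str:
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()

class AuditLog:
    """Append-only log where each entry commits to the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, actor: str, action: str, order_id: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"actor": actor, "action": action, "order_id": order_id,
                  "ts": time.time(), "prev": prev}
        record["hash"] = _digest(record)  # hash covers every field above
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

With delivery confirmations recorded this way (for example, a driver's "delivered" event), a later "I never got it" claim can be checked against a record that nobody quietly edited.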

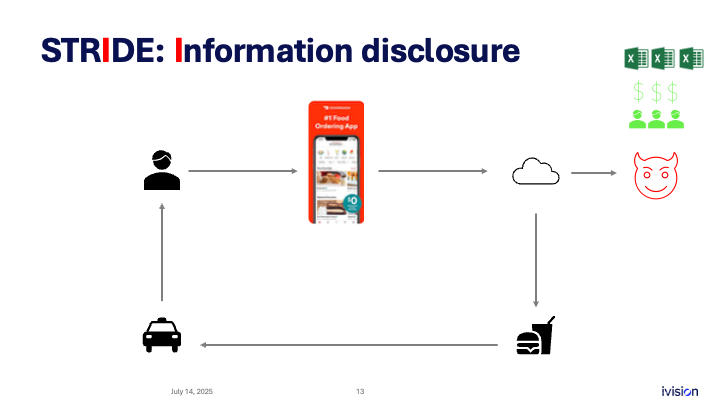

I stands for information disclosure. Information can be exposed in several ways, for example through an application flaw or an infrastructure flaw. Payment data can be leaked, and that is a threat. An attacker might even download personal details in bulk from your system.

We already touched on LLMs and AI. Imagine the system includes an AI customer-support agent that helps with orders. If I interact with this agent and launch a prompt-injection attack, I might make it return information belonging to another user. Doing that in bulk could cause a major data leak.
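A useful design rule here is that the agent's tools, not the model, decide whose data can be read. The sketch below assumes a hypothetical `lookup_orders_tool` and an in-memory `ORDERS` store; the point is that the user ID comes from the authenticated session, so an injected prompt that asks for another user's orders is refused instead of obeyed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Session:
    user_id: str  # set by the authentication layer, never by the model

# Hypothetical order store keyed by user ID.
ORDERS = {
    "alice": [{"order_id": "o1", "items": ["pad thai"]}],
    "bob": [{"order_id": "o2", "items": ["burrito"]}],
}

def lookup_orders_tool(session: Session, model_args: dict) -> list:
    """Order-lookup tool exposed to the support agent.

    The model may pass a user_id (possibly planted by a prompt injection),
    but it is never trusted: any value other than the session user is refused.
    """
    requested = model_args.get("user_id", session.user_id)
    if requested != session.user_id:
        raise PermissionError("agent requested another user's data")
    return ORDERS.get(session.user_id, [])
```

This does not prevent prompt injection itself; it limits what a successful injection can reach, which is what matters for the bulk-leak scenario.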

From an infrastructure perspective, think of a cloud deployment. Unsecured storage buckets that hold transaction data are another threat. If you fail to secure them, you are exposed. Walking through scenarios like these helps you recognize information disclosure threats.
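On AWS, one concrete check for this threat is whether S3 Block Public Access is fully enabled on buckets that hold transaction data. The sketch below only inspects a response-shaped dictionary, assuming the shape returned by S3's `GetPublicAccessBlock` operation (in boto3, `get_public_access_block`); the bucket names and inventory are hypothetical, and a passing result is one signal, not proof that a bucket is private.

```python
REQUIRED_FLAGS = ("BlockPublicAcls", "IgnorePublicAcls",
                  "BlockPublicPolicy", "RestrictPublicBuckets")

def missing_public_access_blocks(response: dict) -> list[str]:
    """Return the Block Public Access flags that are not enabled.

    `response` is assumed to look like a GetPublicAccessBlock result;
    pass an empty dict when the bucket has no configuration at all.
    """
    config = response.get("PublicAccessBlockConfiguration", {})
    return [flag for flag in REQUIRED_FLAGS if not config.get(flag, False)]

# Hypothetical inventory of transaction-data buckets and their settings.
inventory = {
    "orders-transactions": {"PublicAccessBlockConfiguration": {
        "BlockPublicAcls": True, "IgnorePublicAcls": True,
        "BlockPublicPolicy": True, "RestrictPublicBuckets": True}},
    "orders-exports-tmp": {},
}

for bucket, response in inventory.items():
    missing = missing_public_access_blocks(response)
    if missing:
        print(f"{bucket}: not fully blocked, missing {', '.join(missing)}")
```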

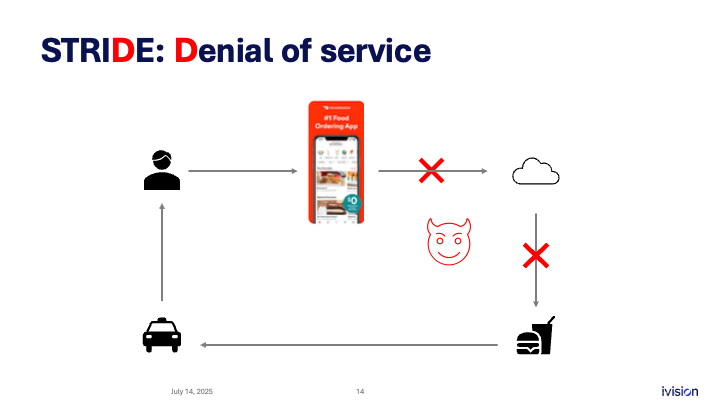

D is for denial of service. Denial of service is an attacker's ability to prevent anyone from placing orders or otherwise using the system.

There are different ways to think about threats associated with denial of service.

First, there is always the possibility of a DDoS, a distributed denial of service attack. You can protect against it with load balancing and related techniques.
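Load balancing and dedicated DDoS protection absorb volume; per-client rate limiting is a complementary control that keeps any single client from flooding an endpoint such as order placement. The sketch below is a standard token bucket kept in memory for a single process; the `rate` and `capacity` values and the `allow_order_request` wrapper are hypothetical, and a limiter like this does not stop a large distributed attack on its own.

```python
import time

class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

# One bucket per client (for example, per account or API key).
buckets: dict[str, TokenBucket] = {}

def allow_order_request(client_id: str) -> bool:
    bucket = buckets.get(client_id)
    if bucket is None:
        bucket = buckets[client_id] = TokenBucket(rate=1.0, capacity=5)
    return bucket.allow()
```

The injectable `clock` keeps the limiter testable without sleeping.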

But there are other threats. Sometimes an attacker can crash the system cheaply by exploiting a bug in the infrastructure. This can be more dangerous because it does not require an army of bots.
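The talk does not name a specific bug, but one common class of cheap denial of service is a request that costs far more to process than to send. A minimal defensive sketch, assuming hypothetical limits and a JSON order format, rejects oversized or pathological input before any expensive work happens.

```python
import json

# Hypothetical limits; real values would come from observed traffic.
MAX_BODY_BYTES = 16 * 1024
MAX_ITEMS = 50
MAX_QUANTITY = 20

class RejectedRequest(ValueError):
    """Raised when a request is refused before any expensive processing."""

def parse_order(body: bytes) -> dict:
    # Check size before parsing so huge payloads never reach the JSON parser.
    if len(body) > MAX_BODY_BYTES:
        raise RejectedRequest("request body too large")
    try:
        order = json.loads(body)
    except ValueError as exc:  # covers JSONDecodeError and bad UTF-8
        raise RejectedRequest("malformed body") from exc
    if not isinstance(order, dict):
        raise RejectedRequest("order must be a JSON object")
    items = order.get("items")
    if not isinstance(items, list) or not 0 < len(items) <= MAX_ITEMS:
        raise RejectedRequest("invalid number of items")
    for item in items:
        qty = item.get("quantity") if isinstance(item, dict) else None
        # type() rather than isinstance() so JSON true/false are rejected.
        if type(qty) is not int or not 1 <= qty <= MAX_QUANTITY:
            raise RejectedRequest("invalid quantity")
    return order
```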

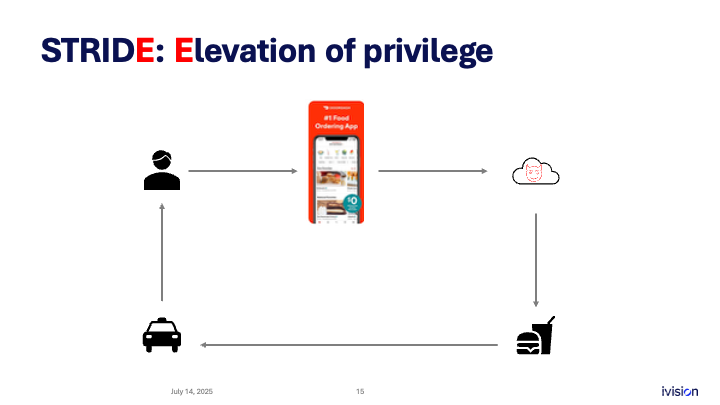

Finally, E stands for elevation of privilege. Here we consider an attacker getting into administrative functionality. What if someone becomes a cloud administrator? How could that happen? Think through every possibility as part of threat modeling:

- gaining admin access through a phishing email

- leaked cloud credentials

- source code that contains credentials

- a misconfigured cloud deployment that exposes an interface on the internet
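Leaked credentials in source code are one of the easier items on that list to check for automatically. This is a deliberately small sketch of a secret scanner using a few widely known patterns (the AWS access key ID prefixes `AKIA` and `ASIA`, PEM private-key headers, and a naive hard-coded password rule); the rule set and function names are illustrative, and dedicated scanners cover far more cases with fewer false positives.

```python
import re
from pathlib import Path

# A few widely known credential shapes; real scanners ship much larger rule sets.
PATTERNS = {
    "AWS access key ID": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    "hard-coded password": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]{6,}['\"]"),
}

def scan_text(text: str, source: str = "<string>"):
    """Yield (source, line number, rule name) for each suspected secret."""
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                yield source, lineno, name

def scan_tree(root: str):
    """Scan every Python file under `root` (the file filter is an assumption)."""
    for path in Path(root).rglob("*.py"):
        yield from scan_text(path.read_text(errors="ignore"), str(path))
```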

From the infrastructure side, many companies deploy web application firewalls. These systems often redirect traffic to themselves, inspect it, then forward it to your APIs or web servers. This setup assumes the attacker is always routed through the provider’s system. However, if you forget to lock down the IP filters, an attacker can bypass the firewall and hit your APIs directly. That misconfiguration is another scenario that can lead to an elevation of privilege.
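Locking down the origin means accepting connections only from the WAF provider's published address ranges. The sketch below uses Python's `ipaddress` module; the ranges are documentation prefixes standing in for the provider's real list, and `from_waf` is a hypothetical helper. It must be applied to the actual TCP peer address (ideally enforced in a firewall or security group rather than in application code), never to a header such as `X-Forwarded-For`, which the attacker controls.

```python
import ipaddress

# Hypothetical WAF egress ranges (documentation prefixes, not a real provider's list).
WAF_RANGES = [ipaddress.ip_network(n) for n in ("203.0.113.0/24", "198.51.100.0/25")]

def from_waf(peer_ip: str) -> bool:
    """True if the TCP peer address falls inside one of the WAF ranges."""
    addr = ipaddress.ip_address(peer_ip)
    # Membership across IP versions is simply False, so IPv6 peers are rejected here.
    return any(addr in net for net in WAF_RANGES)
```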

This is all well and good, but the question now becomes: what is the tangible output of a threat model?

Ideally, the output is literally a list of things that can happen, along with information about whether each risk is mitigated. You also gain valuable visibility into how the system operates, which is especially important for security teams. You can build an architecture diagram, map data flows, and understand system inputs and outputs.

Through this process you understand the risks in the system and how those risks are or are not mitigated. Unmitigated risks, especially critical ones, should bubble up to a risk register. I highly recommend keeping that register as a visible document in the organization because it is a perfect place to track key threats associated with any system, organization, or application.
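The threat list and risk register can start as something very simple. A minimal sketch, assuming a hypothetical `Threat` record and a four-level severity scale, shows entries drawn from the food delivery walkthrough and how unmitigated high and critical items could bubble up to the register.

```python
from dataclasses import dataclass

SEVERITIES = ["low", "medium", "high", "critical"]  # assumed scale, lowest first

@dataclass
class Threat:
    stride: str        # category letter, e.g. "I"
    description: str
    severity: str      # one of SEVERITIES
    mitigated: bool
    mitigation: str = ""

def risk_register(threats, min_severity="high"):
    """Unmitigated threats at or above `min_severity`, most severe first."""
    floor = SEVERITIES.index(min_severity)
    open_items = [t for t in threats
                  if not t.mitigated and SEVERITIES.index(t.severity) >= floor]
    # sorted() is stable, so equal severities keep their original order.
    return sorted(open_items, key=lambda t: SEVERITIES.index(t.severity), reverse=True)

# Hypothetical entries drawn from the food delivery walkthrough.
threats = [
    Threat("S", "Attacker poses as a delivery driver", "medium", True, "driver identity checks"),
    Threat("T", "Order redirected to a different delivery address", "high", False),
    Threat("I", "Support agent leaks other users' orders via prompt injection", "critical", False),
    Threat("I", "Public storage bucket exposes transaction data", "critical", True, "Block Public Access"),
    Threat("D", "Cheap request crashes the order service", "high", False),
]

for t in risk_register(threats):
    print(f"[{t.severity.upper()}] {t.stride}: {t.description}")
```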

Now, who should be doing threat modeling? Is threat modeling only for security people? No, it is not. Threat modeling is for anyone who is building anything: architects, engineers, and business product owners. The people who work with a system every day know the product inside and out. The best abuse cases I have seen have come from product owners and business folks; they understand how the product will be used and how it can be misused. You do not need to know exactly how every attack might occur; simply asking the right questions is already valuable.

Threat modeling can prevent critical bugs. In one case study, we worked with a team of engineers building an e-commerce product. During the threat-modeling sessions we discovered a design flaw that relied on static unique identifiers for authorization. We demonstrated how an attacker could enumerate those identifiers; if they leaked, the system would be only a step away from a major breach. Because we identified the issue early, the team fixed the design before it reached production, and the critical bug was avoided.

We built a threat model for a large AWS deployment meant to support predictive agriculture. The project involved sensitive and expensive assets: the computational model, its intellectual property, and related agricultural data.

We created a full formal threat model, produced diagrams, and mapped how everything worked. This process revealed an infrastructure bottleneck in the computational system that could have let attackers cheaply cause a denial of service for customers.

Because we discovered the issue before the service went live, the client could fix it in advance. Threat modeling let the security team focus their limited resources where they mattered most.

One very recent example came when a customer asked us to test an application running on an air-gapped appliance. That seemed unusual, so I suggested an informal threat model to discuss everything they were worried could go wrong.

We walked through the setup, and the threat model led us to redirect the effort in a way that saved time and money. It became clear that testing the application itself was less valuable, because the key question was how the appliance was protected from an infrastructure perspective. The customer chose a lighter, more efficient network test instead of a full application test. Threat modeling not only helps prioritize resources for software security; it can make work in general far more efficient.

In another example, a customer asked us to build a threat model for a shared-responsibility community development project. They were writing code for the project and wanted us to focus on that specific code, which we did.

Although the threat model initially targeted the code itself, in the process of threat modeling, we redirected the focus because the real problems were in how they were writing the code, not what they were writing. We identified potential issues in the code development and deployment pipeline and pointed out areas they might want to address.

- Internally, we build threat models for our own practice so we understand how to protect your data and any infrastructure we may have access to, along with all the potential abuse cases and the ways we ourselves could be attacked.

- Externally, on large infrastructure deployments such as lift-and-shift projects, where many moving parts create a large attack surface, we incorporate threat modeling in parallel to ensure we design and build securely and account for every abuse case.

I understand that threat modeling is not the first thing you think about each day; client-facing features and new functionality often seem more urgent, yet threat modeling is still vital for moving the business forward.

I want to leave you with this: what if we spent a little time on an activity that is extremely helpful in preventing future vulnerabilities? That activity is threat modeling. It would be even better if it were part of a formal secure software development lifecycle, though I understand that is not always possible. Ultimately, if you do threat modeling, you end up with a clearer picture of your risks and a more trustworthy system. Will it eliminate every new vulnerability? Of course not, but it will reduce them, and that might be the best realistic outcome.

Threat modeling boils down to asking: what can go wrong?